Section: New Results

Perception for Plausible Rendering

Perception of Perspective Distortions in Image-Based Rendering

Participants : Peter Vangorp, Christian Richardt, Gaurav Chaurasia, George Drettakis.


Image-based rendering (IBR) creates realistic images by enriching simple geometries with photographs, for example by mapping the photograph of a building façade onto a plane. However, as soon as the viewer moves away from the correct viewpoint, the retinal image becomes distorted, sometimes leading to gross misperceptions of the original geometry. Two hypotheses from vision science describe how viewers perceive such image distortions: one claims that viewers can compensate for them (and therefore perceive scene geometry reasonably correctly), the other that they cannot (and therefore may perceive significant distortions). We modified the latter hypothesis so that it extends to street-level IBR. We then conducted a rigorous experiment that measured the magnitude of the perceptual distortions that occur with IBR for façade viewing, as well as a rating experiment that assessed the acceptability of these distortions. The results of the two experiments were consistent with one another: viewers' percepts are indeed distorted, but not as severely as predicted by the modified vision science hypothesis. From our experimental results, we developed a predictive model of distortion for street-level IBR, which we use to provide guidelines for the acceptability of virtual views and for capture camera density. We performed a confirmatory study to validate our predictions, and illustrate their use with an application that guides users in IBR navigation to stay in regions where virtual views yield acceptable perceptual distortions (see Figure 5).
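To make the "no compensation" hypothesis concrete, the sketch below works through the classic textbook case; it is a simplified illustration, not the predictive model developed in this work, and it only covers viewing from the wrong distance along the central axis, not the lateral viewpoint shifts of street-level IBR. Assuming the viewer interprets the retinal image literally, a picture captured with its center of projection at distance `capture_dist` but viewed from `viewing_dist` is consistent with the original scene stretched in depth (about the eye) by s = `viewing_dist` / `capture_dist`, since a point (x, z) projecting to d_c·x/z also projects to d_v·x/(s·z). The function name `perceived_corner_angle` and the symmetric-corner setup are hypothetical choices for illustration.

```python
import math


def perceived_corner_angle(capture_dist: float, viewing_dist: float,
                           true_angle_deg: float = 90.0) -> float:
    """Predicted perceived angle of a building corner whose bisector points
    at the viewer, assuming NO perceptual compensation (hypothetical setup).

    Viewing from viewing_dist instead of the center-of-projection distance
    capture_dist is modeled as a depth stretch s = viewing_dist / capture_dist
    about the eye: (x, z) -> (x, s * z). The map is linear, so the walls'
    direction vectors transform the same way.
    """
    s = viewing_dist / capture_dist
    half = math.radians(true_angle_deg) / 2.0
    # Each wall recedes from the apex along (+/- sin(half), cos(half))
    # in (lateral, depth) coordinates; only the depth component is scaled.
    lateral, depth = math.sin(half), s * math.cos(half)
    # The perceived corner angle is twice the angle between a wall and the bisector.
    return math.degrees(2.0 * math.atan2(lateral, depth))


print(round(perceived_corner_angle(1.0, 2.0), 1))  # 53.1 (too far: corner looks sharper)
print(round(perceived_corner_angle(1.0, 0.5), 1))  # 126.9 (too close: corner looks flatter)
```

Under this literal-interpretation assumption, a right-angle corner viewed from twice the capture distance would be predicted to look like a 53° corner; the experiments summarized above found that real percepts are distorted less severely than such a hypothesis predicts.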

This work is a collaboration with Emily Cooper and Marty Banks at UC Berkeley, within the Associate Team CRISP. The paper was accepted to SIGGRAPH 2013 and published in ACM Transactions on Graphics [18].

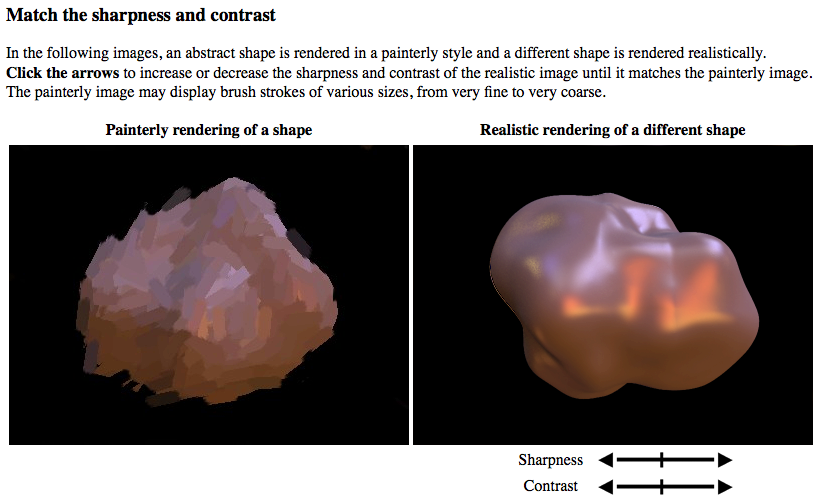

Gloss Perception in Painterly and Cartoon Rendering

Participant : Adrien Bousseau.

Depictions with traditional media such as painting and drawing represent scene content in a stylized manner. It is unclear, however, how well stylized images depict scene properties like shape, material and lighting. In this project, we use non-photorealistic rendering (NPR) algorithms to evaluate how stylization alters the perception of gloss (see Figure 6). Our study reveals that the range of representable gloss is compressed in stylized images: shiny materials appear more diffuse in painterly rendering, while diffuse materials appear shinier in cartoon images.

From our measurements we estimate the function that maps realistic gloss parameters to their perception in a stylized rendering. This mapping allows users of NPR algorithms to predict the perception of gloss in their images. The inverse of this function can be used to exaggerate gloss properties so that the contrast between materials in a stylized image is depicted more faithfully. We conducted our experiment both in a lab and on a crowdsourcing website. While crowdsourcing allowed us to quickly design our pilot study, a lab experiment provides more control over how subjects perform the task. We provide a detailed comparison of the results obtained with the two approaches and discuss their advantages and drawbacks for studies similar to ours.
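The forward mapping and its inverse can be illustrated with a minimal sketch, assuming the mapping is monotonic and represented by a piecewise-linear fit through calibration samples. The sample values below are hypothetical placeholders chosen only to show a compressed range; they are not the measurements from the study, and the names `perceived_gloss` and `exaggerated_gloss` are illustrative.

```python
import numpy as np

# HYPOTHETICAL calibration samples, not measured data from the study:
# a normalized realistic gloss parameter and the gloss perceived in a
# stylized rendering. The perceived range [0.2, 0.8] is compressed.
REALISTIC = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
PERCEIVED = np.array([0.2, 0.35, 0.5, 0.65, 0.8])


def perceived_gloss(g):
    """Forward mapping: predict perceived gloss for realistic parameter g."""
    return np.interp(g, REALISTIC, PERCEIVED)


def exaggerated_gloss(target):
    """Inverse mapping: realistic parameter whose stylized rendering is
    predicted to be perceived with gloss `target`.

    Swapping the axes in np.interp is valid only because PERCEIVED is
    strictly increasing. Targets outside the representable (compressed)
    range are clamped to the end points.
    """
    return np.interp(target, PERCEIVED, REALISTIC)


# To have a material read as gloss 0.7 in the stylized image, render it
# with a larger (exaggerated) parameter, about 0.833 with these samples.
print(exaggerated_gloss(0.7))
```

With these placeholder samples the inverse pushes glossy materials toward higher parameters and diffuse ones toward lower parameters, stretching the compressed range back out; a real mapping would be fitted separately for each rendering style from the experimental data.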

This work is a collaboration with James O'Shea, Ravi Ramamoorthi and Maneesh Agrawala from UC Berkeley in the context of the Associate Team CRISP (see also Section 1), and with Frédo Durand from MIT. It was published in ACM Transactions on Graphics in 2013 [11] and presented at SIGGRAPH.

A High-Level Visual Attention Model

Participant : George Drettakis.

The goal of this project is to develop a high-level attention model based on memory schemas and singleton theory in visual perception. We have developed a method that extends a Bayesian model of attention to incorporate these high-level features, and that can be used directly in a game engine to improve scene design.

This project is a collaboration with the Technical University of Crete, in the context of the Ph.D. of George Koulieris supervised by Prof. Katerina Mania, and with BTU Cottbus (D. Cunningham).